For the past year and a half I’ve been working on stestr, a parallel test runner for python tests. I started this project to fit a need in the OpenStack community, and it has since been adopted as the test runner used in the OpenStack project. But it is a generally useful tool, and I think it would provide value for anyone writing python tests. In this post I want to explain two things: first, the testing stack underlying stestr and the history behind the project; second, how it compares to other popular python test runners out there, and the purpose it fills.

Python unittest

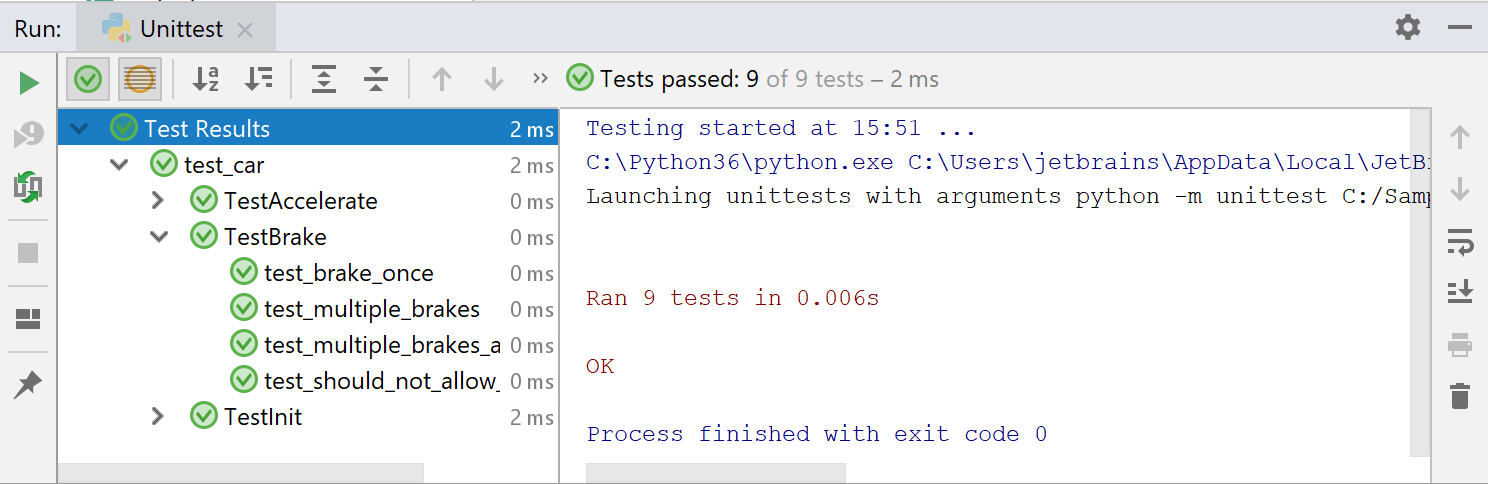

Included in the python standard library is the unittest library. This provides the basic framework for writing, discovering, and running tests in python. It uses an object oriented model where tests are organized in classes (called test cases), with individual methods that each represent a single test. Each test class has some standard fixtures like setUp and tearDown, which define functions to run at certain phases of the test execution. These classes and their modules are combined to build test suites. These suites can either be constructed manually or built automatically by test discovery, which scans a directory for tests.
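Here is a minimal sketch of those pieces. It defines a test case class with setUp and tearDown fixtures, then collects it into a suite either by hand or by discovery. The class name and the "tests" directory in the comment are illustrative.

```python
import unittest


class CounterTests(unittest.TestCase):
    """A test case: each test_* method is one individual test."""

    def setUp(self):
        # Runs before every test method.
        self.items = []

    def tearDown(self):
        # Runs after every test method (as long as setUp succeeded).
        self.items.clear()

    def test_append(self):
        self.items.append(1)
        self.assertEqual(self.items, [1])

    def test_starts_empty(self):
        self.assertEqual(len(self.items), 0)


# Build a suite manually from a test case class...
loader = unittest.TestLoader()
suite = unittest.TestSuite()
suite.addTests(loader.loadTestsFromTestCase(CounterTests))
# ...or let discovery build it by scanning a directory for test*.py modules:
# suite = loader.discover("tests")

result = unittest.TextTestRunner(verbosity=2).run(suite)
```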

The thing which is often misunderstood, especially given its name, is that python unittest is not strictly limited to unit testing or to testing python code. The framework provides a mechanism for running structured python code and treating the outcome of that code as test results. This enables you to write tests that do anything you can do in python. I’ve personally seen examples that test a wide range of things outside of python code, including hardware testing, CPU firmware testing, and REST API service testing.
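As a small illustration of testing something that isn't python code, the sketch below treats an external process's exit status and output as the test result. It uses the current interpreter as a stand-in for an arbitrary command line tool. In practice the command would be whatever binary, device tool, or service client you are exercising.

```python
import subprocess
import sys
import unittest


class ExternalCommandTests(unittest.TestCase):
    """The test body drives an external program; unittest only records the outcome."""

    # Stand-in for any external tool; swap in the real command under test.
    COMMAND = [sys.executable, "-c", "print('hello from a subprocess')"]

    def test_command_succeeds(self):
        proc = subprocess.run(self.COMMAND, capture_output=True, text=True)
        self.assertEqual(proc.returncode, 0)

    def test_command_output(self):
        proc = subprocess.run(self.COMMAND, capture_output=True, text=True)
        self.assertIn("hello", proc.stdout)


suite = unittest.TestLoader().loadTestsFromTestCase(ExternalCommandTests)
result = unittest.TestResult()
suite.run(result)
```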

unittest2

Because unittest is part of the CPython standard library, it gets improvements and new features with each release of CPython. This makes writing tests that support multiple versions of python a bit more difficult, especially if you want to leverage features in newer versions of python. This is where the unittest2 library comes in: it provides backports of features from newer versions of unittest, so that tests running on older versions of python can use them. It was originally written to bring features included in the python 2.7 unittest to python 2.6 and older, but it also backports features from newer versions of python 3 to older versions of python.
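A common way for a test module to take advantage of unittest2 was a conditional import. It prefers the backport when it is installed and falls back to the standard library otherwise. A minimal sketch, with illustrative test names:

```python
try:
    # Prefer the backport when it is installed (typically on older pythons).
    import unittest2 as unittest
except ImportError:
    # Otherwise the standard library module already has the newer features.
    import unittest


class FeatureTests(unittest.TestCase):
    # Skip decorators such as skipUnless arrived in 2.7 and were backported by unittest2.
    @unittest.skipUnless(hasattr(unittest.TestCase, "assertRegex"), "needs assertRegex")
    def test_regex(self):
        self.assertRegex("stestr 2.0", r"\d+\.\d+")

    def test_is_instance(self):
        # assertIsInstance is another 2.7-era addition.
        self.assertIsInstance([], list)
```

The rest of the module just uses the name unittest, so the same tests run unchanged on either side of the import.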

Testtools

Building on the unittest framework is the testtools library. Testtools is an extension on top of unittest that provides additional features, like extra assert methods and a generic matcher framework for more involved object comparisons. While it extends unittest, testtools maintains compatibility with the upstream python standard library unittest. So you can write tests that leverage this extra functionality and still use them with any other unittest suite or runner.

One of the key things that testtools provides for the layers above it in this stack is its improved result stream. This includes the concept of details, which are like attachments that let you store things like logging or stdout alongside a test result.
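The matcher idea from testtools can be shown with a stdlib-only sketch of the pattern. A matcher object's match() returns None on success, or a mismatch object that can describe the failure. A single assertThat-style helper then turns that into an ordinary test failure. The names here (Mismatch, EqualsMatcher, ContainsMatcher, AllOf, assert_that) are hypothetical stand-ins, not testtools' own classes. testtools ships its own matchers and a TestCase.assertThat method.

```python
import unittest


class Mismatch:
    """Explains why a value did not match (hypothetical, mirrors the matcher idea)."""

    def __init__(self, description):
        self._description = description

    def describe(self):
        return self._description


class EqualsMatcher:
    def __init__(self, expected):
        self.expected = expected

    def match(self, actual):
        # None signals a match; anything else is a Mismatch.
        if actual == self.expected:
            return None
        return Mismatch(f"{actual!r} != {self.expected!r}")


class ContainsMatcher:
    def __init__(self, needle):
        self.needle = needle

    def match(self, actual):
        if self.needle in actual:
            return None
        return Mismatch(f"{self.needle!r} not in {actual!r}")


class AllOf:
    """Composite matcher: the first failing sub-matcher's mismatch is reported."""

    def __init__(self, *matchers):
        self.matchers = matchers

    def match(self, actual):
        for matcher in self.matchers:
            mismatch = matcher.match(actual)
            if mismatch is not None:
                return mismatch
        return None


def assert_that(testcase, actual, matcher):
    """assertThat-style helper: turn a mismatch into a normal unittest failure."""
    mismatch = matcher.match(actual)
    if mismatch is not None:
        testcase.fail(mismatch.describe())


class MatcherTests(unittest.TestCase):
    def test_list_contents(self):
        assert_that(self, [1, 2, 3], AllOf(ContainsMatcher(2), EqualsMatcher([1, 2, 3])))
```

Because matchers are ordinary objects, they compose (AllOf above) and carry their own failure text. That is the advantage over adding another assert* method for every kind of comparison.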

Subunit

Subunit is a streaming protocol for test results. It provides a mechanism for sharing test results from multiple sources in real time. It’s a language agnostic protocol with bindings for multiple languages, including python, perl, c, c++, go, js, and others. I also recently learned that someone created a wikipedia page for the protocol: https://en.wikipedia.org/wiki/Subunit_(format)

The python implementation of subunit (the subunit repository contains implementations for multiple languages) is built by extending testtools. It builds on testtools’s test runner and the result stream additions that testtools adds to base unittest. This means that any unittest (or testtools) suite can simply swap its test runner for subunit’s and get a real time subunit output stream. It’s this library that enables parallel execution and strict unittest compatibility in the tools above it in the stack.

The other thing worth pointing out is that, because of OpenStack’s usage of testr and stestr, the community has developed a lot of tooling for consuming subunit, including stackviz, subunit2sql, and subunit2html. All of these can easily be reused by anything that uses subunit. There are also tools to convert between subunit and other test result formats, like junitxml.

Testrepository

Testrepository, also known as testr (its command name), is a bit of a misunderstood project depending on who you talk to. Testrepository is technically a repository for storing test results, just as its name implies. As part of that, it includes a test running mechanism that can run any command which generates a subunit result stream. It supports running those commands in parallel, both locally and on remote machines. Having a repository of results then enables using that data in future runs. For example, testr lets you rerun a test suite with only the tests that failed in the previous run, or use the previous run to bisect failures to try and find test isolation issues. This has proven to be a very useful feature in practice, especially when working with large and/or slow test suites.

However, for several years it was the default test runner used by the OpenStack project, so a lot of people just see it as the parallel test runner used by OpenStack, even though the scope of the project is much larger than just python testing.

ostestr

When the OpenStack project started using testr in late 2012/early 2013, there were a lot of UX issues and complaints about it. People worked around these in a number of ways. Multiple setuptools entrypoints were introduced to expose commands off of setup.py that invoke testr. There were also multiple bash scripts floating around, called pretty_tox.sh, that ran testr with an alternative UI. pretty_tox.sh started in the tempest project and was quickly copied into most projects using testr. However, each copy tended to diverge and embed its own logic or preferences. ostestr was developed to try and unify those bash scripts, and it shows: it was essentially a bash script written in python that would literally subprocess out and call testr.

stestr

This is where stestr entered the field. After maintaining ostestr for a while, I was getting frustrated with a number of bugs and quirks in testrepository itself. Rather than keep working around them, it would be better to fix things at the source. However, given the lack of activity in the testrepository project, this would have been difficult; I personally had pull requests sitting idle on the project for years. So, after a lot of personal deliberation, I decided to fork it.

I took the test runner UX lessons I learned from maintaining ostestr and started rewriting large chunks of testr to make stestr. I also tried to restructure the code to be easier to maintain and to leverage newer python features. testrepository was started 8 years ago, before python 2.7 was released, and it supported python versions older than 2.7. That meant it included a lot of code implementing things that are now included as standard in newer versions of the language.

The other aspect of stestr is that it’s scoped to just being a parallel python test runner. testrepository is designed to be a generic test runner runner, one that works with any test runner emitting a subunit result stream. stestr, by contrast, only deals with python tests. Personally, I always felt there was a tension in the project when using it strictly as a python test runner: some of the abstractions testr had to make caused a lot of extra work for people using it only for python testing. That is why I rescoped the project to only be concerned with python testing.

While I am partial to the tools described above and (for the most part) the way the stack is constructed, these tools are far from the only way to run python tests. In fact, outside of OpenStack this stack isn’t that popular; I haven’t seen it used in many other places. So it’s worth looking at other popular test runners out there and how they compare to stestr.

nosetests

nosetests was at one time the test runner used by the majority of python projects (including OpenStack). It provided a lot of functionality missing from python unittest, especially before python 2.7, which is when python unittest really started to mature. However, it did this by essentially writing its own testing library and coupling that with the runner. While you can use nosetests to run unittest suites in most cases, the real power of nose comes from using its library in conjunction with the runner. This made using other runners or test tooling with a nose test suite very difficult. I have personally worked with a large test suite written using nose, and migrating it to work with any unittest runner is not a small task (it took several months to get the tests running with unittest).

Currently nosetests is a mostly inactive project in maintenance mode. There is a successor project, nose2, which tried to fix some of the issues with nose and bring it up to date. But it too is currently in maintenance mode and not very active anymore (though it’s more active than nose proper). Instead, the docs point people to pytest.

pytest

pytest is, in my experience, by far the most popular python test runner out there. It provides a great user experience (arguably the most pleasant), is pluggable, and seems to have the most momentum as a python test runner. This is with good reason: pytest has a lot of nice features, including very impressive failure introspection, which will basically just tell you why a test failed, making debugging failures much simpler.

But there are a few things to consider when using pytest, the biggest of which is that it’s not strictly unittest compatible. While pytest is capable of running tests written using the unittest library, it’s not actually a unittest based runner. So things like test discovery work differently in pytest. (It’s worth noting pytest supports nose test suites too.)

The other thing that’s missing from pytest by default is parallel test execution. There is a plugin, pytest-xdist, which enables this, and it has come a long way in the last several years. It doesn’t provide all of the same parallel execution features as stestr, especially around isolation and debugging failures, but for most people it’s probably enough.

Quite frankly, if I weren’t the maintainer of stestr, and if I didn’t need or want things stestr provides like first class parallel execution support, a local results repository, strict unittest compatibility, or subunit support, I’d probably just use pytest for my projects.

pytest supports running Python unittest-based tests out of the box. This is meant to let existing unittest-based test suites use pytest as a test runner, and also to allow incrementally adapting the test suite to take full advantage of pytest’s features.

To run an existing unittest-style test suite using pytest, point pytest at the directory containing the tests, for example: pytest tests

pytest will automatically collect unittest.TestCase subclasses and their test methods in test_*.py or *_test.py files.

Almost all unittest features are supported:

@unittest.skip style decorators;

setUp/tearDown;

setUpClass/tearDownClass;

setUpModule/tearDownModule;
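As an illustration, here is a small stdlib-only module that exercises each feature in that list. It could be run under plain unittest or collected by pytest. The names are illustrative.

```python
import sys
import unittest

# Module-level state touched by the module fixtures below.
_module_state = {}


def setUpModule():
    # Runs once before any test in this module (e.g. start a shared service).
    _module_state["ready"] = True


def tearDownModule():
    # Runs once after all tests in this module have finished.
    _module_state.clear()


class SupportedFeatureTests(unittest.TestCase):
    @classmethod
    def setUpClass(cls):
        # Runs once for the class, before its first test method.
        cls.shared = [1, 2, 3]

    @classmethod
    def tearDownClass(cls):
        # Runs once after the last test method of the class.
        del cls.shared

    def setUp(self):
        # Runs before each test method; copy so tests cannot affect each other.
        self.local = list(self.shared)

    def tearDown(self):
        # Runs after each test method.
        self.local = None

    def test_uses_class_fixture(self):
        self.assertEqual(sum(self.local), 6)

    @unittest.skip("demonstrates an unconditional skip")
    def test_skipped(self):
        self.fail("never runs")

    @unittest.skipIf(sys.platform == "win32", "POSIX-only example")
    def test_posix_only(self):
        self.assertEqual("a/b".split("/"), ["a", "b"])
```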

Up to this point pytest does not have support for the following features:

load_tests protocol;

subtests;
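For reference, this is roughly what those two features look like in plain unittest. The first is a module-level load_tests hook that lets the module decide how its suite is built. The second is subTest, which reports each iteration of a loop as its own sub-result. The test names are illustrative.

```python
import unittest


class ParityTests(unittest.TestCase):
    def test_even_numbers(self):
        for n in (0, 2, 4, 100):
            # Each iteration is reported separately if it fails, and the loop
            # keeps going instead of stopping at the first failing value.
            with self.subTest(n=n):
                self.assertEqual(n % 2, 0)


def load_tests(loader, standard_tests, pattern):
    """unittest calls this hook (if present) when loading tests from the module,
    letting the module choose which tests end up in its suite."""
    suite = unittest.TestSuite()
    suite.addTests(loader.loadTestsFromTestCase(ParityTests))
    return suite
```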

Benefits out of the box

By running your test suite with pytest you can make use of several features, in most cases without having to modify existing code:

Obtain more informative tracebacks;

stdout and stderr capturing;

Test selection options using the -k and -m flags;

Stopping after the first (or N) failures;

--pdb command-line option for debugging on test failures (see note below);

Distribute tests to multiple CPUs using the pytest-xdist plugin;

Use plain assert-statements instead of self.assert* functions (unittest2pytest is immensely helpful in this);
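Here is a small before/after illustration of that last point. The same checks are written once with unittest's self.assert* methods and once as a plain function with bare assert statements. The latter is the style pytest's assertion introspection reports on in detail. This is a hand-written sketch, not actual unittest2pytest output, and parse_version is a toy function.

```python
import unittest


def parse_version(text):
    """Toy function under test: '1.2.3' -> (1, 2, 3)."""
    return tuple(int(part) for part in text.split("."))


class ParseVersionTests(unittest.TestCase):
    # unittest style: dedicated assertion methods.
    def test_parse(self):
        self.assertEqual(parse_version("1.2.3"), (1, 2, 3))
        self.assertTrue(parse_version("2.0") > parse_version("1.9"))


# pytest style: a plain function with bare assert statements.
# When collected by pytest, a failing assert reports the compared values.
def test_parse():
    assert parse_version("1.2.3") == (1, 2, 3)
    assert parse_version("2.0") > parse_version("1.9")
```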

pytest features in unittest.TestCase subclasses

The following pytest features work in unittest.TestCase subclasses:

Marks: skip, skipif, xfail;

Auto-use fixtures;
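Here is a sketch of those marks applied to unittest.TestCase methods (test names and reasons are illustrative). The marks are only honored when pytest runs the tests. A plain unittest runner ignores them, so under plain unittest the xfail and skip tests below would simply run and fail.

```python
import os
import sys
import unittest

import pytest


class MarkedTests(unittest.TestCase):
    @pytest.mark.skipif(sys.platform == "win32", reason="POSIX-only path handling")
    def test_posix_separator(self):
        assert os.sep == "/"

    @pytest.mark.xfail(reason="known bug, tracked separately")
    def test_known_bug(self):
        assert 1 + 1 == 3  # expected to fail; pytest reports it as xfailed

    @pytest.mark.skip(reason="not relevant in this environment")
    def test_skipped(self):
        assert False  # never executed under pytest
```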

The following pytest features do not work, and probably never will due to different design philosophies:

Fixtures (except for autouse fixtures, see below);

Parametrization;

Custom hooks;

Third party plugins may or may not work well, depending on the plugin and the test suite.

Mixing pytest fixtures into unittest.TestCase subclasses using marks

Running your unittest with pytest allows you to use its fixture mechanism with unittest.TestCase style tests. Assuming you have at least skimmed the pytest fixture features, let’s jump-start into an example that integrates a pytest db_class fixture, setting up a class-cached database object, and then reference it from a unittest-style test:
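Such a fixture might look like the sketch below, modeled on the example in the pytest documentation. DummyDB is just a placeholder for an expensive resource. The fixture could live in the test module itself, or in a conftest.py next to it so every test module in that directory can use it.

```python
import pytest


@pytest.fixture(scope="class")
def db_class(request):
    class DummyDB:
        """Placeholder standing in for a real, expensive database handle."""

    # request.cls is the test class that requested this fixture; setting the
    # attribute on the class makes it visible to every test method as self.db.
    request.cls.db = DummyDB()
```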

This defines a fixture function db_class which, if used, is called once for each test class and which sets the class-level db attribute to a DummyDB instance. The fixture function achieves this by receiving a special request object which gives access to the requesting test context, such as the cls attribute, denoting the class from which the fixture is used. This architecture decouples fixture writing from actual test code and allows re-use of the fixture by a minimal reference, the fixture name. So let’s write an actual unittest.TestCase class using our fixture definition:

The @pytest.mark.usefixtures('db_class') class-decorator makes sure that the pytest fixture function db_class is called once per class. Due to the deliberately failing assert statements, we can take a look at the self.db values in the traceback:

This default pytest traceback shows that the two test methods share the same self.db instance, which was our intention when writing the class-scoped fixture function above.

Using autouse fixtures and accessing other fixtures

Although it’s usually better to explicitly declare use of fixtures you need for a given test, you may sometimes want to have fixtures that are automatically used in a given context. After all, the traditional style of unittest-setup mandates the use of this implicit fixture writing, and chances are you are used to it or like it.

You can flag fixture functions with @pytest.fixture(autouse=True) and define the fixture function in the context where you want it used. Let’s look at an initdir fixture which makes all test methods of a TestCase class execute in a temporary directory with a pre-initialized samplefile.ini. Our initdir fixture itself uses the pytest builtin tmpdir fixture to delegate the creation of a per-test temporary directory:
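Here is a sketch of such an initdir fixture, adapted from the pytest documentation's example. It also uses pytest's builtin monkeypatch fixture to change into the temporary directory, so the previous working directory is restored after each test. Newer pytest versions recommend the pathlib-based tmp_path fixture over tmpdir, and it could be substituted here with the equivalent pathlib calls.

```python
import unittest

import pytest


class MyTest(unittest.TestCase):
    @pytest.fixture(autouse=True)
    def initdir(self, tmpdir, monkeypatch):
        # tmpdir: pytest's builtin per-test temporary directory (a py.path.local).
        # monkeypatch.chdir switches into it and restores the old cwd afterwards.
        monkeypatch.chdir(tmpdir)
        tmpdir.join("samplefile.ini").write("# testdata")

    def test_method(self):
        # Runs inside the temporary directory prepared by initdir.
        with open("samplefile.ini") as f:
            s = f.read()
        assert "testdata" in s
```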

Due to the autouse flag the initdir fixture function will be used for all methods of the class where it is defined. This is a shortcut for using a @pytest.mark.usefixtures('initdir') marker on the class like in the previous example.

Running this test module …:

… gives us one passed test because the initdir fixture function was executed ahead of the test_method.

Note

unittest.TestCase methods cannot directly receive fixture arguments, as implementing that would likely interfere with the ability to run general unittest.TestCase test suites.

The above usefixtures and autouse examples should help to mix pytest fixtures into unittest suites.

You can also gradually move away from subclassing unittest.TestCase toward plain asserts, and then start to benefit from the full pytest feature set step by step.

Note

Due to architectural differences between the two frameworks, setup and teardown for unittest-based tests is performed during the call phase of testing instead of in pytest’s standard setup and teardown stages. This can be important to understand in some situations, particularly when reasoning about errors. For example, if a unittest-based suite exhibits errors during setup, pytest will report no errors during its setup phase and will instead raise the error during call.